
Background

Over the years I have worked in network security, I have designed and developed countless custom network automation and monitoring resources. Some of these are already documented at https://andrewburch.me/projects/, such as my Firewall Version Map, but I figured it would be easiest to detail all my various scripts in one location. The following information provides a technical overview of several of these scripts, but all sensitive and confidential information has been omitted due to the obvious security concerns of full transparency.

Implementation

It will come as no surprise to those who know me how frequently I resort to shell scripts for basic (and even advanced) automation. Anything “more” is usually unnecessary - Linux itself is capable of handling complex sequential operations from the command line, much like what would be expected from Perl or Python, but without the dependence on the changing syntax and version updates prevalent in “real” programming languages.

Wherever Bash was unable to accommodate my requirements, I used the Expect scripting language, an extension to the (T)ool (C)ommand (L)anguage (TCL), which together offer high-level, general-purpose, interpreted, and dynamic development. Normally, Expect is most useful for automating interactions with programs that expose their output to the terminal, in a process called “screen scraping” (i.e. scraping the terminal output into a buffer for filtering and additional logic).

My hope is that the following examples support the claim made above, and that readers take away more than they came with when they opened this page.

Fiber Cut Detection

Between 2017 and 2018, an idea to monitor fiber optic rings was brought forward by my upper-management. Essentially, the idea was to have an email or some other sort of notification sent out to the network support teams whenever there was a suspected fiber cut between two sites in the ring. Naturally this idea was brought to my attention, being the only programmer on a team of network design engineers.

Due to my proclivity for Linux, I asserted I could accomplish the task with a Bash script by using SNMP to poll interface statuses on our datacenter routers, since every site was given its own interface on these devices. Specifically, this command would be:

snmpget -v3 -l authPriv -u "$user" -a SHA -A "$auth" -x AES -X "$priv" "$host" "$oid"

Where:

$user - SNMPv3 username

$auth - SNMPv3 authentication password

$priv - SNMPv3 encryption key

$host - IP address or hostname of the router

$oid - SNMP OID to poll

The script would need to retrieve these fields from the user rather than store them on disk, which may have looked like the following:

# Ask user for SNMP info

oid="1.3.6.1.2.1.2.2.1.8"

echo -e "SNMP v3 username (-u): \\c"; read -r user

echo -e "SNMP v3 authentication key (-A): \\c"; read -r auth

echo -e "SNMP v3 privacy key (-X): \\c"; read -r priv

echo -e "Polling interval (s): \\c"; read -r poll_interval

echo -e "Alert after this many polls: \\c"; read -r alert_interval

# Ask user who we want to alert

echo -e "SMTP alert destination: \\c"; read -r email

The returned value indicates an “up” or “down” status of the specific interface polled. By running snmpget against every site’s interface and comparing all the up and down values in a known truth table, it was possible to determine between which two sites a cut was suspected.

# X / Y

if [[ "$site1" == "up" &&

"$site2" == "up" &&

"$site3" == "down" &&

"$site4" == "up" &&

"$site5" == "down"

]]; then

echo "$(red "Fiber cut between X & Y!")"

# Separate multiple findings with a newline

[[ -n "$msg" ]] && msg+="\\n"

msg+="Suspected fiber cut between X & Y."

sbj="Suspected Fiber Cut - X/Y"

fi
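The truth table above can also be expressed as data rather than as one conditional per ring segment. The following is a minimal sketch under assumptions that are not part of the original script: the five-site ring, the pattern strings and segment names in `cut_table`, and the helper names `status_from_snmp` and `lookup_cut` are all hypothetical. It also assumes Net-SNMP prints ifOperStatus either as `up(1)` / `down(2)` or as a bare integer when MIBs are not loaded.

```bash
#!/usr/bin/env bash

# Hypothetical truth table: one up/down pattern (site1..site5) per ring segment.
declare -A cut_table=(
    ["up up down up down"]="X/Y"
    ["down up up up down"]="Y/Z"
)

# Reduce one line of snmpget output to "up", "down", or "unknown".
# Assumes output such as "IF-MIB::ifOperStatus.5 = INTEGER: up(1)".
status_from_snmp() {
    case "$1" in
        *"INTEGER: up(1)"*|*"INTEGER: 1")   echo "up" ;;
        *"INTEGER: down(2)"*|*"INTEGER: 2") echo "down" ;;
        *)                                  echo "unknown" ;;
    esac
}

# Print the suspected segment for a list of site statuses,
# or print nothing when the pattern is not in the table.
lookup_cut() {
    local pattern="$*"
    [[ -n "${cut_table[$pattern]+set}" ]] && echo "${cut_table[$pattern]}"
    return 0
}
```

With this layout, adding a ring segment means adding one line to the table instead of another multi-line conditional. For example, `lookup_cut up up down up down` prints `X/Y`.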

To send the email, I used the mail command:

# Send email

if [[ -n "$msg" ]]; then

alert_count=$((alert_count + 1))

# Alert on the first detection, then again once alert_interval polls have passed

if (( alert_count == 1 || alert_count > alert_interval )); then

echo "$(yellow "Sending email...")"

echo -e "$msg" | mail -s "$sbj" email@address.com,"$email" -- -f sender@address.com

if (( alert_count > alert_interval )); then

alert_count=1

fi

fi

else

# Send the recovery notice only if an alert was previously active

if (( alert_count > 0 )); then

echo "$(yellow "Sending email (recovered)...")"

echo -e "All interfaces are back up." | mail -s "Interfaces Up" email@address.com,"$email" -- -f sender@address.com

fi

alert_count=0

fi

# Sleep

echo "$(yellow "Sleeping ")$(cyan $poll_interval)$(yellow " seconds... ")"

sleep $poll_interval

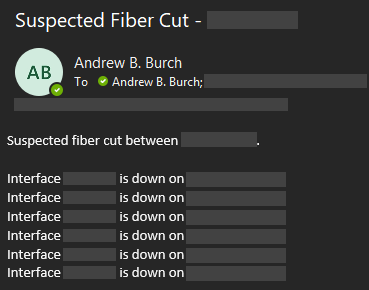

Example output would appear in our inboxes, which may have looked like the following (interface and site names redacted due to security concerns):

This script runs in an infinite loop, polling the routers continuously every 150 seconds to check for suspected fiber ring issues. More often than not, when cuts do occur, my team receives an email detailing between which two sites the cut has occurred before our commercial network monitoring solution is able to generate tickets for each interface - these tickets do not contain any identifying information like my emails do.

In a future revision, I would like to take advantage of the built-in (O)ptical (T)ime (D)omain (R)eflectometer (OTDR) to include how far along the fiber optic cable the cut has occurred - this would greatly speed up the dispatch and repair process involved when these events occur.

Switch Interface Flaps

In 2019, my team became aware of a potential Junos OS software bug impacting all (P)ower (o)ver (E)thernet (PoE) interfaces configured in the same slot and line card - in short, PoE would cease to operate as expected causing all PoE interfaces to flap temporarily before recovering.

The Junos OS naming convention is as follows:

ge-0/1/2

ge - gigabit ethernet port

0 - FPC 0 (slot/VC member 0)

1 - PIC 1 (module/line card 1)

2 - port 2

This means that if, for example, ports ge-0/0/0, ge-0/0/1, ge-0/0/2, and ge-0/1/0 were all configured as PoE interfaces, the first three in the list could be simultaneously impacted in a power-related event. This does not mean ge-0/1/0 would not be impacted, but due to the nature of the issue we were observing, the bug seemed to impact only those ports configured on the same FPC and PIC.
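To make the grouping concrete, here is a small sketch of how an interface name could be split into its parts and reduced to an FPC/PIC key. The function names `parse_junos_if` and `poe_group` are hypothetical and do not appear in the script that follows; the regular expression only covers the simple `type-fpc/pic/port` form described above.

```bash
#!/usr/bin/env bash

# Split a Junos interface name such as ge-0/1/2 into type, FPC, PIC, and port.
# Prints "type fpc pic port", or returns 1 if the name does not match.
parse_junos_if() {
    local re='^([a-z]+)-([0-9]+)/([0-9]+)/([0-9]+)$'
    [[ $1 =~ $re ]] || return 1
    echo "${BASH_REMATCH[1]} ${BASH_REMATCH[2]} ${BASH_REMATCH[3]} ${BASH_REMATCH[4]}"
}

# Grouping key: interfaces that share an FPC and PIC print the same key.
poe_group() {
    local fields fpc pic
    fields=$(parse_junos_if "$1") || return 1
    IFS=' ' read -r _ fpc pic _ <<< "$fields"
    echo "$fpc/$pic"
}
```

Under this sketch, `ge-0/0/0`, `ge-0/0/1`, and `ge-0/0/2` all map to the key `0/0`, while `ge-0/1/0` maps to `0/1`.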

The proposed idea by my team was to build a script capable of monitoring interfaces grouped together in this fashion. Essentially, if multiple interfaces (6+) bounced simultaneously (or within the same ~2 second window) on any particular date, they wanted to know by an automated email alert. They also wanted to be sent the relevant lines from the switch logs.

Originally, I wrote this script using Expect and TCL, but after running into a few unforeseen issues with Expect’s buffer, I rewrote the entire thing using only Bash. The new design included many improvements over the original, such as archived logging and duplicate detection to ensure redundant emails would never be sent out. The following Bash script was developed in a single workday and can be executed against a list of switches of arbitrary length, allowing the network engineer to monitor all configured interfaces on all Juniper devices across an entire enterprise.

#!/bin/bash

IFS=

# Local functions

get_first() {

echo "$(ssh_run "$1" "$2" "$3" "show log messages | match \"^.*[0-9]{2}:[0-9]{2}:[0-9]{2}.*\$\" | no-more" | head -1)"

}

get_lines() {

mon=$(date '+%b')

day=$(($(cut_leading_zero "$(date '+%d')")))

yesterday_mon=$(date -d '-1 day' '+%b')

yesterday_day=$(($(cut_leading_zero "$(date -d '-1 day' '+%d')")))

# Syslog timestamps pad single-digit days with an extra space (e.g. "Mar  5")

if (( yesterday_day < 10 )); then

yesterday_filter="$yesterday_mon  $yesterday_day"

else

yesterday_filter="$yesterday_mon $yesterday_day"

fi

if (( day < 10 )); then

filter="($yesterday_filter|$mon  $day)"

else

filter="($yesterday_filter|$mon $day)"

fi

echo "$(ssh_run "$1" "$2" "$3" "show log messages | match \"^$filter.*SNMP_TRAP_LINK_DOWN.*\$\" | except \"^.*CMDLINE.*\$\" | no-more")"

}

get_last() {

echo "$(ssh_run "$1" "$2" "$3" "show log messages | last 1 | no-more" | head -1)"

}

is_int() {

# Print a token only for integers, so that [[ $(is_int "$x") ]] is a real test

[[ $1 =~ ^-?[0-9]+$ ]] && echo "true"

}

cut_leading_zero() {

echo "$(echo "$1" | sed 's/^0*//')"

}

get_month_from_line() {

echo "$1" | cut -f1 -d ' '

}

get_day_from_line() {

IFS=' '

d=""

read -ra arr <<< "$1"

for token in "${arr[@]}"; do

if [[ $token =~ ^[0-9]{1,2}$ ]]; then

d="$token"

break

fi

done

IFS=

echo "$d"

}

get_time_from_line() {

IFS=' '

t=""

read -ra arr <<< "$1"

for token in "${arr[@]}"; do

if [[ $token =~ ^([0-9]{2}\:){2}[0-9]{2}$ ]]; then

t="$(cut_leading_zero "$(echo "$token" | sed -e 's/://g')")"

break

fi

done

IFS=

echo "$t"

}

# Check number of arguments

if [[ $# -ge 2 ]]; then

echo "$(red "Unknown argument(s) were supplied")"

exit

elif [[ $# -eq 1 ]]; then

# If argument is a file which exists

if ! [[ -f "$1" ]]; then

echo "$(red "File $1 does not exist")"

exit

fi

fi

# Get list of host(s)

case $# in

# No file supplied as input, ask for one host

0 ) echo -e "Device (host/IP): \c"; read -r host

declare -a hosts=("$host")

;;

# File given, read into array

1 ) dos2unix "$1"

readarray -t hosts < "$1"

;;

esac

# Ask user for username

echo -e "Username: \c"; read -r user

# Ask user for password

echo -n "SSH password: "; read -rs pass && echo

# Ask user who we want to alert

echo -e "SMTP alert destination: \\c"; read -r alert

# Ask user for email subject

echo -e "SMTP subject: \\c"; read -r sbj

# Start date & time

start=$(get_date_time)

# Output directory

fold=$(mkdir_output "../output/$me-$start")

# Log file

echo -e "Log file name: \\c"; read -r log

email="$fold/$log"

# Ask user for polling interval in seconds

echo -e "Polling interval (s): \\c"; read -r sleeptime

if [[ $(is_int "$sleeptime") ]]; then

sleeptime=$(($(cut_leading_zero "$sleeptime")))

else

sleeptime=3600

fi

# Retrieve log lines

while true; do

count=0

for host in "${hosts[@]}"; do

[[ -z "$host" ]] && echo "$(red "Host was empty - skipping...")" && continue

echo ""

echo "$(yellow "Checking") $(cyan "$host")$(yellow "...")"

lines=$(get_lines "$user" "$pass" "$host")

# Count non-empty lines only (echo | wc -l reports 1 even when nothing was returned)

num_lines=$(grep -c . <<< "$lines")

if (( num_lines > 0 )); then

first=$(get_first "$user" "$pass" "$host")

last=$(get_last "$user" "$pass" "$host")

email_lines=()

while read -r line1; do

m1=$(get_month_from_line "$line1")

d1=$(($(get_day_from_line "$line1")))

t1=$(($(get_time_from_line "$line1")))

temp=()

if [[ $(is_int "$t1") ]]; then

ct=0

while read -r line2; do

if [[ "$line1" != "$line2" ]]; then

m2=$(get_month_from_line "$line2")

if [[ "$m1" != "$m2" ]]; then

continue

fi

d2=$(($(get_day_from_line "$line2")))

if (( $d1 != $d2 )); then

continue

fi

t2=$(($(get_time_from_line "$line2")))

if [[ $(is_int "$t2") ]]; then

dif=0

if (( $t1 < $t2 )); then

dif=$(($t2 - $t1))

else

dif=$(($t1 - $t2))

fi

if (( $dif <= 2 )); then

if ! [[ " ${email_lines[*]} " == *"$line1"* ]]; then

temp+=("$line1")

email_lines+=("$line1")

ct=$((ct + 1))

fi

if ! [[ " ${email_lines[*]} " == *"$line2"* ]]; then

temp+=("$line2")

email_lines+=("$line2")

ct=$((ct + 1))

fi

fi

fi

fi

done <<< "$lines"

if (( $ct <= 5 )); then

for line in "${temp[@]}"; do

echo "$(red "Ignoring:") $line"

for (( i = 0; i < ${#email_lines[@]}; i++ )); do

if [[ "${email_lines[i]}" == "$line" ]]; then

email_lines=("${email_lines[@]:0:$i}" "${email_lines[@]:$((i + 1))}")

i=$((i - 1))

fi

done

done

fi

fi

done <<< "$lines"

len=${#email_lines[@]}

if (( $len <= 1 )); then

echo "$(red "No log messages to report")"

continue

fi

echo "$(yellow "Found $len log messages - checking current log file...")"

if [[ -f "$email" && -s "$email" ]]; then

i=0

for line in "${email_lines[@]}"; do

if grep -qF -- "$line" "$email"; then

echo "$(yellow "Present:") $line"

email_lines=("${email_lines[@]:0:$i}" "${email_lines[@]:$((i + 1))}")

i=$((i - 1))

len=$((len - 1))

fi

i=$((i + 1))

done

if (( $len <= 1 )); then

echo "$(red "No log messages to report")"

continue

fi

fi

echo "$(green "$len log messages to report")"

echo "$first" >> "$email"

count=$((count + 1))

IFS=$'\n'

email_lines_sorted=($(sort <<< "${email_lines[*]}"))

IFS=

for line in "${email_lines_sorted[@]}"; do

echo "$(cyan "Adding:") $line"

echo "$line" >> "$email"

count=$((count + 1))

done

echo "$last" >> "$email"

count=$((count + 1))

else

echo "$(red "No log messages to report")"

fi

done

# Email log if it exists and has been updated

if (( $count >= 1 )); then

echo "$(yellow "Sending email...")"

echo -e "New lines have been appended to the log ($count)." | mail -s "$sbj" -a "$email" email@address.com,"$alert" -- -f sender@address.com

fi

echo -e "\n$(yellow "Sleeping...")" && sleep $((sleeptime))

done
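One detail worth noting in the script above: timestamps are compared as HHMMSS integers with the colons stripped, so 12:00:59 and 12:01:00 become 120059 and 120100 and differ by 41 rather than by one second. The following is a sketch of an alternative comparison that converts to seconds since midnight first. The function names `to_seconds` and `within_window` are hypothetical and are not part of the original script.

```bash
#!/usr/bin/env bash

# Convert HH:MM:SS to seconds since midnight.
# The 10# prefix forces base 10 so values such as "08" are not parsed as octal.
to_seconds() {
    local h m s
    IFS=: read -r h m s <<< "$1"
    echo $(( 10#$h * 3600 + 10#$m * 60 + 10#$s ))
}

# Succeed when two timestamps fall within a window (default: 2 seconds).
within_window() {
    local a b window="${3:-2}"
    a=$(to_seconds "$1")
    b=$(to_seconds "$2")
    local dif=$(( a > b ? a - b : b - a ))
    (( dif <= window ))
}
```

For example, `within_window 12:00:59 12:01:00` succeeds, while `within_window 12:00:00 12:00:03` fails. This sketch still does not handle events that straddle midnight.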

Palo Alto Asset Tracking

Aside from the visibility my Firewall Version Map project added to our firewall monitoring capabilities, an idea arrived on my desk to produce a weekly report detailing various additional metrics, such as the asset model name, latency, uptime, and serial number (serial numbers change frequently for hardware replacements) formatted in an Excel document.

To retrieve these metrics, I wrote a function named get_fw_info() that runs snmpget, nslookup, and ping and returns all the values in a single delimited line in .csv format:

get_fw_info() {

sn="$(snmpget -v2c -c "$2" "$1" "mib-2.47.1.1.1.1.11.1" | sed 's/^.\{44\}//' | tr -d '"')"

model="$(snmpget -v2c -c "$2" "$1" "mib-2.47.1.1.1.1.13.1" | sed 's/^.\{44\}//' | tr -d '"')"

version="$(snmpget -v2c -c "$2" "$1" "1.3.6.1.4.1.25461.2.1.2.1.1.0" | cut -f4 -d ' ' | tr -d '"')"

latency="$(ping -c 1 -w 1 "$1" | grep -E "^.*(icmp_seq).*$" | cut -f8 -d ' ' | sed 's/^time=//')"

ip="$(ns_lookup_host "$1")"

sitecode="$(echo "$ip" | cut -f2 -d '.')"

location="$(snmpget -v2c -c "$2" "$1" "sysLocation.0" | sed 's/^.\{36\}//' | tr -d ',')"

uptime="$(snmpget -v2c -c "$2" "$1" "sysUpTimeInstance" | grep -o -E "[0-9]+\ (day|days)")"

if [[ -z "$uptime" ]]; then

uptime="0 days"

fi

echo "$1,$sn,$model,$version,$latency ms,$ip,$sitecode,$location,$uptime"

}

To automatically adjust the cell column width for each category, I wrote a function named get_longest() to determine the longest value:

get_longest() {

cut -f "$2" -d ',' "$1" | awk '{ print length }' | sort -nr | head -1

}

To convert the .csv to .xlsx, I embedded some Python code into the Bash script:

import xlsxwriter

import csv

wb = xlsxwriter.Workbook('$xlsx')

firewalls = wb.add_worksheet('Firewalls')

firewalls.write(0, 0, 'Firewall')

firewalls.write(0, 1, 'Serial Number')

firewalls.write(0, 2, 'Model')

firewalls.write(0, 3, 'Version')

firewalls.write(0, 4, 'Latency')

firewalls.write(0, 5, 'IP Address')

firewalls.write(0, 6, 'Site Code')

firewalls.write(0, 7, 'Location')

firewalls.write(0, 8, 'Uptime')

with open('$csvcsv', 'r') as f:

    reader = csv.reader(f)

    for r, row in enumerate(reader):

        for c, col in enumerate(row):

            firewalls.write(r + 1, c, col)

firewalls.set_column(0, 0, $longest_firewall)

firewalls.set_column(1, 1, 13)

firewalls.set_column(2, 2, $longest_model)

firewalls.set_column(3, 3, 7)

firewalls.set_column(4, 4, $longest_latency)

firewalls.set_column(5, 5, $longest_ip)

firewalls.set_column(6, 6, 9)

firewalls.set_column(7, 7, $longest_location)

firewalls.set_column(8, 8, $longest_uptime)

# Write the workbook to disk

wb.close()

To mail the generated report out to our team, I used the mail command:

echo -e "Attached is the automatically generated Palo Alto Asset Tracking spreadsheet." | mail -s "Palo Alto Asset Tracker" -a "$xlsx" email@address.com,"$email" -- -f server@address.com

While I could have executed this code in a cron job, I ended up putting the code in an infinite loop with a configurable sleep time (echo -e "Sleeptime (s): \\c"; read -r sleeptime). To send the email out on a weekly schedule, as requested, I set this value to 604,800 (number of seconds in a week). My team would now receive an automatically generated report, once again improving network visibility.
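As a quick check of the weekly interval, the arithmetic can be reproduced in the shell. The cron line in the comment is only an illustration of the alternative mentioned above: the script path and schedule are hypothetical, and a cron job would need its credentials and recipients supplied non-interactively rather than through prompts.

```bash
#!/usr/bin/env bash

# Seconds in one week: 7 days x 24 hours x 60 minutes x 60 seconds.
week_seconds=$(( 7 * 24 * 60 * 60 ))
echo "$week_seconds"

# Hypothetical cron alternative (path and schedule are illustrative only):
# run at 08:00 every Monday instead of sleeping inside the script.
#   0 8 * * 1 /path/to/asset_tracker.sh
```

The `echo` prints 604800, matching the value entered at the sleep-time prompt.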

VoIP Phone Rebooter

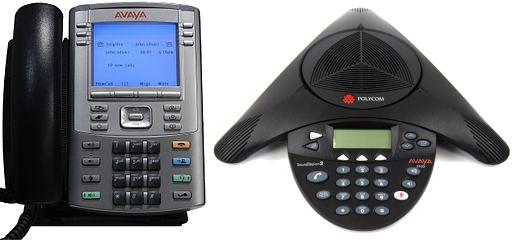

(V)oice (o)ver (I)(P) (VoIP) phones have become common in the workplace with the advancements made in bandwidth allocation to support UDP data streaming. Two IP phone vendors I have worked with include Avaya and Polycom, which use the (L)ink (L)ayer (D)iscovery (P)rotocol (LLDP) to advertise their identities and capabilities on the local area network.

Occasionally, especially during infrastructure firmware updates on our switches and firewalls, these phones would need to be rebooted to reset their connection to the network and function as intended. This process could either be performed manually (by physical disconnect of the Ethernet cable) or by individually cycling PoE on each interface in the switch configuration.

Both of these options quickly become monotonous and repetitive, given how routine upgrades are performed. While it is possible to reset PoE on all interfaces configured to support it with a single command, doing so could impact other PoE devices aside from the IP phones we were interested in targeting. For this reason, I was approached by one of our internal contractors to write a script that could reboot all the IP phones connected to any switch on the network (or a list of switches to make life even easier).

Because this work would require modification of the switch’s configuration, thorough testing would be necessary. It would also be impossible to use Bash on its own (much to my dissatisfaction), as a persistent connection would be required to run multiple commands based on previous terminal output and because of configure exclusive requiring a commit before exiting (otherwise, all staged changes would be lost).

As already mentioned, these phones advertise themselves over LLDP to the switch, and the information is viewable by running show lldp neighbors | match (Avaya|Polycom) | except Local | no-more - this command lists which interfaces have Avaya and Polycom devices connected to them in a list-like format.
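To illustrate what extracting the interfaces from that list involves, here is a sketch in Bash and awk (the actual script does this in Expect). The sample text is invented for illustration and was not captured from a real switch, and the helper name `phone_interfaces` is hypothetical. It assumes the local interface is the first column of each line.

```bash
#!/usr/bin/env bash

# Print the local interface (first column) of every line that names an
# Avaya or Polycom neighbor, skipping the "Local Interface" header row.
phone_interfaces() {
    awk '/Avaya|AVX|Polycom/ && $1 != "Local" { print $1 }' | sort -u
}

# Hypothetical sample output, not captured from a real device.
sample='ge-0/0/5   -   00:11:22:33:44:55   ge-0/0/5   AVXABC123
ge-0/0/7   -   00:11:22:33:44:66   1          Polycom VVX 410
ge-0/0/9   -   00:11:22:33:44:77   ge-0/0/1   core-switch-01'

phone_interfaces <<< "$sample"
```

Run against the sample, this prints `ge-0/0/5` and `ge-0/0/7` and leaves out the uplink on `ge-0/0/9`.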

To log in to the switch and enter configuration mode, I spawned the SSH process and wrote ssh_login{} and configure{} procs to handle the terminal interaction with the login and configuration prompts:

# Set up SSH connection

spawn ssh $user@$host

ssh_login $user $host $pass $log_master $log

# Enter configuration mode

configure $user 1 $log_master $log

proc ssh_login { user host pass args } {

set timeout 20

foreach file $args { log "Connecting via SSH to [ cyan $host ] as [ cyan $user ]..." $file }

expect {

"No route to host" {

foreach file $args { log_error "No route to $host - skipping" $file }

send "exit\r"

exit

}

"refused" {

foreach file $args { log_error "Connection to $host refused - skipping" $file }

send "exit\r"

exit

}

"resolve" {

foreach file $args { log_error "Could not resolve $host - skipping" $file }

send "exit\r"

exit

}

"timed out" {

foreach file $args { log_error "Timed out while connecting to $host - skipping" $file }

send "exit\r"

exit

}

timeout {

foreach file $args { log_error "Timed out while connecting to $host - skipping" $file }

send "exit\r"

exit

}

"(yes/no)?" {

send "yes\r"

foreach file $args { log "Added key from [ cyan $host ]" $file }

expect -re "\[Pp]assword\\:\ "

}

-re "(\[Pp]assword\\:\ |\[Pp]assword\\:)" {}

}

foreach file $args { log "Sending password..." $file }

send "$pass\r"

expect {

"Permission denied, please try again." {

foreach file $args { log_error "Permission denied - possible incorrect username / password" $file }

send "exit\r"

exit

}

-re "\[Pp]assword\\:\ " {

foreach file $args { log_error "$host is re-prompting for password - skipping" $file }

send "exit\r"

exit

}

timeout {

foreach file $args { log_error "Timed out while connecting to $host - skipping" $file }

send "exit\r"

exit

}

-re [ get_prompt ] { foreach file $args { log "Logged in as [ cyan $user ]" $file } }

}

}

proc configure { user excl args } {

if { $excl } {

send "configure exclusive\r"

} else {

send "configure\r"

}

expect {

"exclusive {master:0}" {

foreach file $args { log_error "Configuration is locked by another user in 'configure exclusive' - skipping" $file }

exit

}

"Users currently editing the configuration" {

foreach file $args { log_error "Configuration is being edited by another user - skipping" $file }

exit

}

"error: configuration database modified" {

foreach file $args { log_error "Configuration database modified (possibly changed but not committed) - skipping" $file }

exit

}

"The configuration has been changed but not committed" {

foreach file $args { log_error "Configuration was previously changed but not committed - skipping" $file }

send "exit\r\r"

exit

}

timeout {

foreach file $args { log_error "Timed out while entering configuration mode - skipping" $file }

exit

}

-re [ get_prompt ] { foreach file $args { log "Entered configuration mode" $file } }

}

}

To obtain a list of these devices, I created a new list and used lappend to append them to it after running show lldp neighbors. This code executes until the prompt is reached:

log "Checking LLDP neighbors..." $log_master $log

set phones [ new_list ]

pausend "run show lldp neighbors | match (Avaya|Polycom) | except Local | no-more\r"

while { 1 } {

expect {

-re "(Avaya|AVX|Polycom)" {

set interface [ get_lldp_neighbors_line $expect_out(buffer) ]

if { $interface ne "" && [ lsearch -exact $phones $interface ] == -1 } {

lappend phones $interface

log "IP phone detected on [ yellow $interface ]" $log_master $log

}

}

timeout {

log_error "Timed out while checking LLDP neighbors - skipping" $log_master $log

exit

}

-re [ get_prompt ] { break }

}

}

To disable PoE on the interfaces I had collected, I ran another command in a foreach loop:

set c 0

foreach phone $phones {

send "set poe interface $phone disable\r"

log "Disabling PoE on [ yellow $phone ]..." $log_master $log

expect {

timeout {

log "Timed out while disabling PoE on interface $phone - skipping..." $log_master $log

continue

}

-re [ get_prompt ] { incr c }

}

}

log "Disabled PoE on [ purple $c ] interfaces" $log_master $log

# Exit if nothing to commit

if { ! [ llength $phones ] } {

log_error "No IP phones with PoE enabled were detected on $host - skipping..." $log_master $log

exit

}

To apply the changes, I ran the commit and-quit command. To reboot the phones, and to restore the configuration back to the switch, I used the rollback 1 command:

# Commit changes

log "Committing configuration..." $log_master $log

send "commit and-quit\r"

sleep 10

expect {

timeout {

log_error "Timed out while committing configuration - check device manually" $log_master $log

send "exit\r"

exit

}

"error: commit failed" {

log_error "Could not commit configuration - skipping" $log_master $log

send "exit\r"

exit

}

-re [ get_prompt ] { log [ green "Committed configuration" ] $log_master $log }

}

# Reboot

if { $reboot } {

log "Rebooting [ purple [ llength $phones ] ] detected IP phones..." $log_master $log

configure $user 1 $log_master $log

log "Rolling back configuration..." $log_master $log

send "rollback 1\r"

expect {

timeout {

log_error "Timed out while rolling back for reboot" $log_master $log

exit

}

-re [ get_prompt ] {

log "Committing configuration..." $log_master $log

send "commit and-quit\r"

expect {

timeout {

log_error "Timed out while committing rollback for reboot" $log_master $log

exit

}

"error: commit failed" {

log_error "Could not rollback configuration for reboot" $log_master $log

exit

}

-re [ get_prompt ] { log [ green "Committed configuration" ] $log_master $log }

}

}

}

}

Over the last few years, this script has been used to restore IP phone functionality during upgrade windows and trusted to reboot phones during major network incidents at company call centers. Previously, this process could have taken hours to complete for each site - with my script, which can be provided an arbitrary list of switches, the maximum time is about a minute.

RFC1918 Route Collection

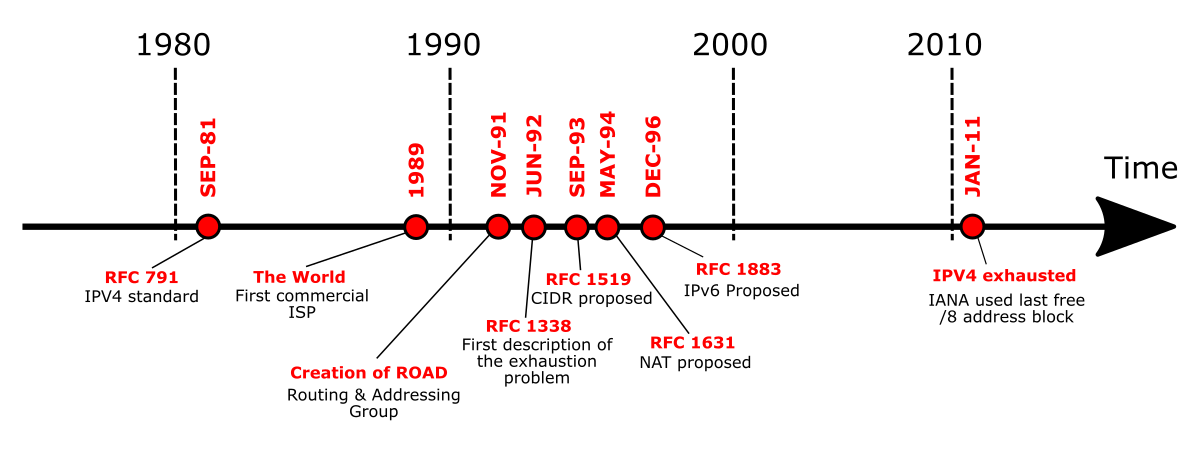

Every network engineer, at some point in their career, is made familiar with RFC1918, the Internet standard used to classify and categorize private IP address space. Because IPv4 utilizes 32-bit addressing, the maximum number of available addresses is 2^32 = 4,294,967,296 based upon its specification. Decades ago, it was believed this allocation of ~4.3 billion IP addresses would always be enough for all new devices ever to be connected to the Internet. Needless to say, this belief was clearly incorrect.

(N)etwork (a)ddress (t)ranslation (NAT) seeks to provide global address space conservation by translating public IP addresses on the Internet (WAN) to private IP addresses on the local network (LAN) and vice versa. Therefore, RFC1918 reserves three specific subnets for use in private networks:

10/8 - 10.0.0.0/8 (10.255.255.255) (Class 1A/256B)

172.16/12 - 172.16.0.0/12 (172.31.255.255) (Class 16B)

192.168/16 - 192.168.0.0/16 (192.168.255.255) (Class 1B/256C)

The 10/8 subnet allocates (2^24 - 2) = 16,777,214 addresses, the 172.16/12 subnet allocates (2^20 - 2) = 1,048,574 addresses, and the 192.168/16 subnet allocates (2^16 - 2) = 65,534 addresses, all three of which can be and normally are used in any large enterprise’s private network. These allocations are further segmented and internally documented by the individual company based on the requirements of its own business needs.
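The host counts above follow from 2^(32 - prefix length) - 2, where the two subtracted addresses are the network and broadcast addresses. The arithmetic can be reproduced in Bash; the function name `usable_hosts` is purely illustrative.

```bash
#!/usr/bin/env bash

# Usable host addresses in a prefix: 2^(32 - prefix_length),
# minus the network and broadcast addresses.
usable_hosts() {
    echo $(( (1 << (32 - $1)) - 2 ))
}

total=0
for prefix in 8 12 16; do
    n=$(usable_hosts "$prefix")
    echo "/$prefix: $n"
    total=$(( total + n ))
done
echo "total: $total"
```

This prints 16777214, 1048574, and 65534 for the three prefixes, and a total of 17891322.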

It is not uncommon for security audits to scan all of RFC1918 on a company network. One such tool, called MASSCAN, can be used to scan RFC1918 in a matter of seconds as well as the entire Internet in under 6 minutes from a single machine. While these tools are impressive, unfortunately, they can cause performance problems due to (t)ime (t)o (l)ive (TTL) expiration on unused address space.

For example, if all of RFC1918 is to be scanned in an audit, but not all of RFC1918 is actually in use by the company, an extreme amount of unnecessary and excessive traffic will be sent down the wire and will not expire until the TTL limit is reached. Consequently, any links approaching bandwidth limits may be overrun and impact the business with an unanticipated outage.

One solution to resolve this problem is to scan only the subnets that are actually used by the company. However, given that modern networks are ever-changing, and subnets may be retired or provisioned at any time, how does one compile a list of the current subnets in use at the company? Naturally, a script must be the answer.

I was approached to design such a script in 2018. It became clear to me very quickly how dependent the design of this script would be on the layout of the individual company’s infrastructure, as the easiest way to obtain the list of in-use subnets involves polling whichever devices are primarily responsible for routing traffic across the network. It also involves comparing generic routes against the more specific routes they contain, as specific routes are preferable when determining which address spaces are actually being used.
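Comparing a generic route against a more specific one comes down to a containment test on the two prefixes. Here is a minimal sketch of that test in Bash; the function names `ip_to_int` and `subnet_within` are hypothetical, and the code assumes well-formed dotted-quad input without leading zeros.

```bash
#!/usr/bin/env bash

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed when CIDR $1 (the more specific route) lies inside CIDR $2.
subnet_within() {
    local inner_ip=${1%/*} inner_len=${1#*/}
    local outer_ip=${2%/*} outer_len=${2#*/}
    # A shorter prefix can never sit inside a longer one.
    (( inner_len >= outer_len )) || return 1
    local mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
    (( ( $(ip_to_int "$inner_ip") & mask ) == ( $(ip_to_int "$outer_ip") & mask ) ))
}
```

For example, `subnet_within 10.20.30.0/24 10.20.0.0/16` succeeds, so the /24 would be kept as the more specific entry and the /16 treated as its generic parent.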

Due to security concerns, I am unable to comment on specific types of devices polled in relation to the network layout of my employer. Nevertheless, to obtain the route table from a router, the command may have looked like this:

routes=$(ssh_run "$username" "$password" "$router" "show route table | match \"^(1\.|10\.|1(7|9)2\.).*(Direct|OSPF|Static).*\$\" | except \"^(1\.|10\.)($site1|$site2|$site3)\..*\$\" | no-more" | cut -f1 -d ' ' | sort -u)

Where:

\"^(1\.|10\.|1(7|9)2\.).*(Direct|OSPF|Static).*\$\" - RFC1918 address space

except \"^(1\.|10\.)($site1|$site2|$site3)\..*\$\" - specific site filtering

sort -u - sort the list and remove duplicates

And ssh_run() is a function used to run a remote command by creating a temporary pipe with mkfifo and echoing the result generated by sshpass as the return value:

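ssh_run() {

# Arguments: user, password, host, remote command

local pipe result

pipe=$(mktemp -u)

mkfifo -m 600 "$pipe"

exec 3<>"$pipe"

rm "$pipe"

# Hand the password to sshpass over file descriptor 3 instead of the command line

printf '%s\n' "$2" >&3

result=$(sshpass -d3 ssh -o StrictHostKeyChecking=no -o ConnectTimeout=5 -q -x "$1@$3" "$4")

exec 3>&-

echo "$result"

}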
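The file descriptor technique used by ssh_run() can be seen in isolation with a small sketch that needs neither sshpass nor a remote host. The function name `fd_secret_roundtrip` is hypothetical; it writes a value into an unlinked FIFO held open on descriptor 3 and reads it back, which is the same path the password takes before `sshpass -d3` consumes it. The sketch assumes Linux, where opening a FIFO read-write does not block.

```bash
#!/usr/bin/env bash

# Write a secret into an anonymous FIFO on file descriptor 3 and read it back.
fd_secret_roundtrip() {
    local pipe secret_out
    pipe=$(mktemp -u)            # generate a unique path without creating a file
    mkfifo -m 600 "$pipe"        # FIFO readable and writable by the owner only
    exec 3<>"$pipe"              # hold the FIFO open for reading and writing
    rm "$pipe"                   # unlink the path; the descriptor stays valid
    printf '%s\n' "$1" >&3       # the secret never appears in an argument list
    IFS= read -r secret_out <&3
    exec 3>&-                    # close the descriptor
    printf '%s\n' "$secret_out"
}

fd_secret_roundtrip 'example secret'
```

Because the FIFO is unlinked immediately, the secret is never stored under a path on disk and is not visible in the process list.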

By using this command and similar commands against a list of specific devices responsible for routing traffic across the network, it was possible to generate a list of subnets from the in use RFC1918 address space. To ensure unique and sorted entries, and to double check all subnets were contained in RFC1918, the following code could be used:

# Remove duplicates and sort

arr=()

while read -r line; do

arr+=("$line")

done <<< "$routes"

# Sort, unique, remove any blanks and non-RFC1918

output=$(echo -e "${arr[@]}" | tr ' ' '\n' | sort -u | grep -E -v "^$|10\.0\.0\.0/8|172\.16\.0\.0/12|192\.168\.0\.0/16" | grep -E "^(1\.|10\.|1(7|9)2\.).*$")
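Note that the final pattern above, ^(1\.|10\.|1(7|9)2\.), also admits 1.x addresses and every 172.x and 192.x address, not only the RFC1918 ranges. If that breadth is not intentional for a given network, a stricter filter could look like the following sketch; the function names `is_rfc1918` and `filter_rfc1918` are hypothetical.

```bash
#!/usr/bin/env bash

# Succeed only for addresses or prefixes that start inside
# 10/8, 172.16/12 (172.16 through 172.31), or 192.168/16.
is_rfc1918() {
    local re='^(10\.|172\.(1[6-9]|2[0-9]|3[01])\.|192\.168\.)'
    [[ $1 =~ $re ]]
}

# Keep only RFC1918 entries from a newline-separated list on stdin.
filter_rfc1918() {
    grep -E '^(10\.|172\.(1[6-9]|2[0-9]|3[01])\.|192\.168\.)'
}
```

With this filter, 172.31.0.0/16 passes while 172.32.0.0/16, 192.169.0.0/16, and 1.2.3.0/24 are rejected.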

To summarize all routes contained in $output, I used a Python utility called ipconflict:

ipconflict -f /path/to/subnets.txt

Once the script was refined enough to not produce conflicts (i.e. subnets with overlapping address spaces), I labeled it complete and set up an automated email to our audit teams as a drop-in replacement for their normal RFC1918 audits. This resulted in significantly less bandwidth utilization since the scan was targeted against networks that were actually being used by the company as opposed to all 17,891,322 IP addresses in RFC1918, reducing the risk of causing unanticipated outages.

Juniper Precaps

Precaps (short for precaptures) are, as the name implies, captures of device configurations and memory before network changes are made in a production environment. The idea is to generate precaps before and after changes are made in order to compare results and look for any discrepancies or other indications of unexpected behavior.

One of my first Expect scripts involved generating precaps for Juniper switches - these precaps would serve primarily as a backup in case we needed to manually rollback changes and secondarily as a diff for before and after comparisons.

Essentially, I needed to log the output from all specified commands twice - one for before and one for after changes were made. Some of these commands may have included:

show configuration

show ethernet-switching table

show route

show vlans

show chassis alarms

show chassis hardware

show arp no-resolve

show lldp neighbors

show ospf neighbor

show vrrp summary

show interfaces mc-ae

show log messages | last 300

Between the first and second execution, the Bash script calling the Expect script would prompt the user to enter continue before continuing:

echo "-----------------------------------------"

echo "The first capture has completed successfully."

echo "Make your changes to the device(s) captured above now."

echo "Do not terminate (ctrl+c) this script unless you"

echo "only wish to run a single capture without post-comparison."

echo "When you have finished, enter 'continue' to continue."

echo "-----------------------------------------"

prompt_force_continue

prompt_force_continue() {

while true; do

read -rp "Please enter 'continue' to continue. " input

case $input in

"continue" ) break; ;;

esac

done

}

To compare the before and after logs, I used diff -u:

diff -u before.txt after.txt 2> /dev/null > compare.txt
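Because diff reports its result through its exit status (0 for identical files, 1 for differences, 2 for an error such as a missing file), a wrapper can tell the operator which case occurred. The following is a sketch only; the function name `compare_captures` is hypothetical and not part of the original script.

```bash
#!/usr/bin/env bash

# Compare two captures, write a unified diff to $3, and report the outcome.
# diff exits 0 when the files are identical, 1 when they differ, 2 on error.
compare_captures() {
    local status=0
    diff -u "$1" "$2" > "$3" 2> /dev/null || status=$?
    case $status in
        0) echo "identical" ;;
        1) echo "changed" ;;
        *) echo "error"; return 2 ;;
    esac
}
```

Calling `compare_captures before.txt after.txt compare.txt` prints `changed` when the second capture differs from the first, which distinguishes a genuinely empty diff from a comparison that never ran.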

Palo Alto TSF

On the Palo Alto platform, it is possible to generate a complete export of all system logs with a single command. The resulting archive is called a (T)ech (S)upport (F)ile (TSF). This file is normally requested for support cases opened with the vendor so that their engineers can make recommendations to their customers (e.g. common triaging practices, RMAs, etc.).

Although it was never directly requested, a situation arose in 2017 that called for a script to support automated TSF analysis. Due to a known hardware defect, a specific firewall model was being returned to the vendor - for my team, this meant uploading a generated TSF from every firewall affected by the defect to the support case in order to begin the RMA process. To generate the TSF, I used an Expect script:

# Generate TSF

log "Requesting TSF for [ cyan $host ]..." $log_master $log

pausend "request tech-support dump\r"

set job ""

expect {

"Server error : Another user activity report or techsupport generation is pending. Please try again later." {

log_error "Failed to request TSF (another generation request is already pending)" $log_master $log

send "exit\r"

exit

}

-re [ get_prompt ] {

foreach line [ split $expect_out(buffer) "\n" ] {

if { [ string trim $line ] ne "" && [ regexp "jobid \[0-9]+" $line ] } {

set job [ string trim [ lindex [ split $line " " ] end ] ]

log "Requested TSF for [ cyan $host ], jobid is [ purple $job ]" $log_master $log

break

}

}

}

timeout {

log_error "Timed out while requesting TSF for $host" $log_master $log

send "exit\r"

exit

}

}

if { $job eq "" } {

log_error "Failed to request TSF for $host" $log_master $log

send "exit\r"

exit

}

# Keep checking job progress until done

while { 1 } {

send "show jobs id $job\r"

set fin 0

expect {

-re [ get_prompt ] {

foreach line [ split $expect_out(buffer) "\n" ] {

if { [ string trim $line ] ne "" } {

set status "Progress of job [ purple $job ] on [ cyan $host ]:"

if { [ regexp "\[0-9]+\%" $line ] } {

log "$status [ yellow [ string trim [ lindex [ split $line " " ] end ] ] ]" $log_master $log

} elseif { [ string match "*FIN*" $line ] } {

log "$status [ green "100%" ]" $log_master $log

set fin 1

break

}

}

}

}

timeout {

log_error "Timed out while checking progress of job $job on $host" $log_master $log

send "exit\r"

exit

}

}

if { $fin } { break }

sleep 30

}
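The parsing logic in the Expect code above does not depend on Expect itself. As a rough sketch in Bash, assuming the firewall answers with a line containing "jobid <number>" and that a job status line either ends in a percentage or contains FIN, the same checks could look like this. The function names and sample lines are illustrative assumptions, not captured device output.

```shell
#!/bin/bash
# extract_job_id LINE
# Prints the number following "jobid" if the line contains one.
# (The Expect version takes the last word of the line instead.)
extract_job_id() {
    local re='jobid ([0-9]+)'
    if [[ $1 =~ $re ]]; then
        echo "${BASH_REMATCH[1]}"
    fi
}

# job_progress LINE
# Prints the percentage found on the line, or 100% if the line is
# marked FIN, in the same order as the Expect loop checks them.
job_progress() {
    local re='([0-9]+)%'
    if [[ $1 =~ $re ]]; then
        echo "${BASH_REMATCH[1]}%"
    elif [[ $1 == *FIN* ]]; then
        echo "100%"
    fi
}

# Illustrative lines only.
extract_job_id "Exec job enqueued with jobid 42"
job_progress "2017/06/01 10:00:00  42  Exec  ACT  PEND  45%"
job_progress "2017/06/01 10:00:00  42  Exec  FIN  OK"
```

With these sample lines the three calls print 42, 45% and 100%.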

To automatically export the TSF directly to the support case, I used the scp export tech-support command targeting tacupload.paloaltonetworks.com:

# Export TSF

log "Exporting TSF from [ cyan $host ]..." $log_master $log

send "scp export tech-support to $tac_case@tacupload.paloaltonetworks.com:/\r"

while { 1 } {

expect {

"(yes/no)?" {

send "yes\r"

log "Added key from [ cyan $host ]" $log_master $log

}

-re "\[Pp]assword\\:\ " {

log "Sending TAC password..." $log_master $log

send "$tac_pass\r"

while { 1 } {

expect {

".tar.gz" {

set timeout 10

while { 1 } {

log "Exporting TSF..." $log_master $log

expect -re [ get_prompt ] { break }

}

log [ green "Export successful" ] $log_master $log

send "exit\r"

break

}

timeout {

log_error "Timed out while sending SCP password" $log_master $log

send "exit\r"

exit

}

}

}

break

}

-re "(timed\ out|lost\ connection)" {

log_error "Timed out connection (lost connection)" $log_master $log

send "exit\r"

exit

}

timeout { log "Waiting for SCP password prompt..." $log_master $log }

}

}

This script has been used sparingly over the years and is most useful when several firewalls need vendor support at the same time.

Palo Alto Backups

As with the previous script, I figured it would be useful to export backups of the firewall configs automatically on a regular schedule using the scp export device-state command. Although it was not requested, this would save my team the time of doing it manually, so I wrote another script:

# Export device state

log "Exporting device state..." $log_master $log

send "scp export device-state to $user_server@$server:$path_server/$firewall-[ get_date_time ].tar.gz\r"

expect {

"(yes/no)? " {

send "yes\r"

log "Accepted ECDSA key fingerprint" $log_master $log

expect {

"password: " {

log "Sending password..." $log_master $log

send "$pass_server\r"

}

}

}

"password: " {

log "Sending password..." $log_master $log

send "$pass_server\r"

}

timeout {

log_error "Timed out while attempting to scp device state from $firewall (#1) - skipping..." $log_master $log

exit

}

}

expect {

"lost connection" {

log_error "Lost connection while attempting to scp device state from $firewall - skipping..." $log_master $log

exit

}

timeout {

log_error "Timed out while attempting to scp device state from $firewall (#2) - skipping..." $log_master $log

exit

}

-re [ get_prompt ] {

log [ green "Export successful" ] $log_master $log

}

}

Running the above code in a loop produces regular backups stored in .tar.gz archives on the specified server, which can be retrieved at a later date if they are needed. These backups have saved us countless hours of rebuilding configurations when all other recovery operations failed.
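The loop itself is not shown above. A minimal sketch of what one pass could look like follows, assuming a hypothetical Expect script name (backup.exp), a made-up inventory, and a timestamp format of my own choosing, since the original get_date_time helper is not shown. The command is only printed here rather than executed.

```shell
#!/bin/bash
# backup_name FIREWALL [TIMESTAMP]
# Builds the archive name used as the scp target. The timestamp format
# is an assumption; the original get_date_time helper is not shown.
backup_name() {
    local firewall=$1
    local stamp=${2:-$(date +%Y-%m-%d_%H-%M-%S)}
    echo "$firewall-$stamp.tar.gz"
}

# Hypothetical inventory; a real run would read this from a file.
firewalls=(fw-east-01 fw-west-01)

# backup_pass [TIMESTAMP]
# One pass over the inventory. backup.exp is a placeholder name and the
# command is printed, not run.
backup_pass() {
    local fw
    for fw in "${firewalls[@]}"; do
        echo "expect backup.exp $fw -> $(backup_name "$fw" "$1")"
    done
}

backup_pass "2022-01-01_00-00-00"
```

Wrapping backup_pass in a while loop with a sleep, or calling it from cron, would give the regular schedule described above.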

SNMP Thresholds

Due to the overwhelming number of custom SNMP monitoring requests on various platforms, I decided to write a generic SNMP monitoring script capable of sending out email alerts if specific and configurable thresholds were violated. This script could be designed entirely in Bash as it did not require a persistent or interactive connection to the target devices.

I started by requesting information from the user, similar to all my other scripts:

# Ask user for SNMP info

echo -e "SNMP OID: \\c"; read -r oid

echo -e "SNMP index ($oid.???): \\c"; read -r index

echo -e "SNMP community string: \\c"; read -r community

# Ask user who we want to alert

echo -e "SMTP alert destination: \\c"; read -r email

echo -e "Email subject: \\c"; read -r subject

# Ask user for threshold

echo -e "Threshold: \\c"; read -r threshold

# Ask user for polling interval

echo -e "Polling interval: \\c"; read -r sleeptime

From here, all the script needs to do is perform the snmpget query repeatedly based on the parameters specified by the user above.

# Perform snmpget repeatedly

while true; do

for host in "${hosts[@]}"; do

echo "$(yellow "Retrieving index $(cyan "$index")$(yellow " of OID ")$(cyan "$oid")$(yellow " on host ")$(cyan "$host")$(yellow "...")")"

value=$(snmpget -v2c -c "$community" "$host" "$oid.$index" | cut -f4 -d ' ')

! [[ "$value" =~ ^[0-9]+$ ]] && echo "$(red "Error - failed to receive numerical value via SNMP")" && continue

if (( value >= threshold )); then

echo "$(red "Warning - value $value exceeds threshold $threshold on host $host...")"

echo "Warning - value $value received from snmpget ($oid.$index) exceeds set threshold $threshold on host $host at $(get_date_time)." \

| mail -s "$subject" email@address.com,"$email" -- -f email@address.com

else

echo "$(green "Received ok value $value from host $host...")"

fi

done

sleep $(($sleeptime))

done

Because this code is generic enough to support any SNMP OID index, it can be executed against any platform supporting SNMP polling (as long as the MIB(s) are known). This script has been used for all sorts of purposes, and I was even able to teach one of our contractors some basic shell scripting by showing him this code. He went on to create a few of his own custom monitoring resources based on my examples.
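To make the parsing and threshold logic easier to test without a live device, it can be pulled into two small functions. This is a sketch under assumptions: the function names are mine, the reply line is an illustrative example in the usual net-snmp "OID = TYPE: VALUE" shape, and the comparison forces base 10 so that a value such as 08 is not rejected as invalid octal.

```shell
#!/bin/bash
# parse_snmp_value LINE
# Takes the fourth space-separated field of an snmpget reply, as the
# script above does with cut.
parse_snmp_value() {
    echo "$1" | cut -f4 -d ' '
}

# check_threshold VALUE THRESHOLD
# Prints "error" for non-numeric values, "alert" when the value is at or
# above the threshold, and "ok" otherwise.
check_threshold() {
    local value=$1 threshold=$2
    if ! [[ $value =~ ^[0-9]+$ ]]; then
        echo "error"
    elif (( 10#$value >= 10#$threshold )); then
        echo "alert"
    else
        echo "ok"
    fi
}

# Illustrative reply line, not captured from a real device.
reply='IF-MIB::ifInErrors.3 = Counter32: 120'
value=$(parse_snmp_value "$reply")
check_threshold "$value" 100
check_threshold "$value" 500
check_threshold "No Such Instance" 100
```

For the sample reply, the three calls print alert, ok and error in that order.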

Palo Alto License Fix

In early 2022, my team was asked to resolve a licensing issue affecting all of our corporate firewalls. Unfortunately, the fix could not be pushed through Panorama (the firewalls' management platform) or the exposed XML API; it required accessing every firewall manually, executing a few commands in sequence, committing the device configuration and validating that the change was successful. Fortunately, it was the perfect use case for an Expect/TCL script.

Done manually by one person who also has their regular duties to attend to, this work could have taken multiple work days or even multiple work weeks. Seeing the potential for automation, I developed and tested a script in about one hour and scheduled an approved time to run it. The script calculates its own ETA, which came out to several hours (in reality, the run took just over 10 hours). This script follows:

#!/usr/bin/expect -f

# Imports

source "../api/tcl/api.tcl"

source_all [ find_sources "../api/tcl" "*" ]

# Make sure correct number of args were supplied

if { $argc != 4 } {

announce "Incorrect number of args supplied" "-"

exit

}

# Set args to variables

set firewall [ lindex $argv 0 ]

set user_firewall [ lindex $argv 1 ]

set pass_firewall [ lindex $argv 2 ]

set fold [ lindex $argv 3 ]

# Make sure all variables are not empty

if { $firewall eq "" || $user_firewall eq "" || $pass_firewall eq "" || $fold eq "" } {

announce "Usage: <firewall> <user firewall> <pass firewall> <output directory>" "-"

exit

}

# Logs

set log_master "$fold/log-master.txt"

set log "$fold/log-$user_firewall@$firewall.txt"

# Set up SSH connection

spawn ssh $user_firewall@$firewall

ssh_login $user_firewall $firewall $pass_firewall $log_master $log

# Set timeout to 60 seconds

set timeout 60

# Fix license

log "Deleting license key..." $log_master $log

send "delete license key PAN_DB_URL*\r"

expect {

"successfully removed PAN_DB_URL" {

log [ green "Deletion successful" ] $log_master $log

}

timeout {

log_error "Timed out while deleting license key - skipping..." $log_master $log

exit

}

-re [ get_prompt ] {

log [ yellow "Deletion possibly unsuccessful, prompt was reached before any success message" ] $log_master $log

}

}

clear_buffer

log "Requesting license fetch..." $log_master $log

send "request license fetch\r"

expect {

"License entry" {

send "q"

expect {

-re [ get_prompt ] {

log [ green "License fetch successful (#2)" ] $log_master $log

}

timeout {

log_error "Timed out while requesting license - skipping (#2)..." $log_master $log

exit

}

}

}

timeout {

log_error "Timed out while requesting license - skipping (#1)..." $log_master $log

exit

}

}

clear_buffer

log "Entering configuration mode..." $log_master $log

send "configure\r"

expect {

"Entering configuration mode" {

log "Entered configuration mode" $log_master $log

}

timeout {

log_error "Timed out while entering configuration mode - skipping..." $log_master $log

exit

}

}

clear_buffer

set timeout 600

log "Committing configuration..." $log_master $log

send "commit force\r"

expect {

"Configuration committed successfully" {

log [ green "Commit successful" ] $log_master $log

}

"failed" {

log_error "Commit failed - skipping..." $log_master $log

exit

}

timeout {

log_error "Timed out while committing - skipping..." $log_master $log

exit

}

}

clear_buffer

set timeout 60

log "Exiting configuration mode..." $log_master $log

send "exit\r"

expect {

"Exiting configuration mode" {

log "Exited configuration mode" $log_master $log

}

timeout {

log_error "Timed out while exiting configuration mode - skipping..." $log_master $log

exit

}

}

clear_buffer

log "Checking url-cloud status..." $log_master $log

send "show url-cloud status\r"

expect {

"valid" {

log [ green "Check successful, url-cloud status valid" ] $log_master $log

}

"expired" {

log_error "Check successful, url-cloud status expired" $log_master $log

}

timeout {

log_error "Timed out while checking url-cloud status" $log_master $log

}

}

done $log_master $log

This script allowed us to restore a valid license on all of our corporate firewalls, avoiding a lapse in support from the vendor and a lot of manual labor. For my quick thinking and cost-saving solution, I was awarded my second “Leaving It Better Award” (the first was in 2017), which included special waste elimination recognition at the company and a significant cash bonus for my extracurricular efforts.
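The ETA calculation mentioned earlier is not part of the per-firewall script shown above. One simple way a wrapper could estimate it, assuming every remaining firewall takes the average time observed so far, is sketched below. The function names and the numbers in the example are made up for illustration.

```shell
#!/bin/bash
# estimate_eta ELAPSED_SECONDS COMPLETED TOTAL
# Prints the estimated remaining seconds, assuming each remaining device
# takes the average time observed so far.
estimate_eta() {
    local elapsed=$1 completed=$2 total=$3
    if (( completed <= 0 )); then
        echo "unknown"
        return
    fi
    echo $(( elapsed * (total - completed) / completed ))
}

# format_duration SECONDS
# Prints the duration as HH:MM:SS.
format_duration() {
    printf '%02d:%02d:%02d\n' $(( $1 / 3600 )) $(( $1 % 3600 / 60 )) $(( $1 % 60 ))
}

# Made-up numbers: 10 of 200 devices done after 30 minutes.
remaining=$(estimate_eta 1800 10 200)
format_duration "$remaining"
```

With these numbers the estimate is 34200 seconds, printed as 09:30:00.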

Palo Alto System Environmentals

In late 2022, I ran into an issue with an older script I had written to monitor for power supply alarms on the Palo Alto firewalls. In the past, my team often did not notice these alarms until a physical inspection was made, and inspections happened irregularly, depending on the task at hand. This is of course troubling for any infrastructure analyst supporting the hardware in question, where network visibility and health performance metrics are important.

In short, my old script was designed to SSH into each firewall, run a command, and check the output for the “True” keyword to detect power supply alarms. Unfortunately, Palo Alto changed the output format of this command on newer hardware - specifically, in our case, on the PA-440s - which broke the script and generated a false alarm.

To remediate this issue, I rewrote the script with several enhancements in mind. Instead of accessing the firewalls over SSH and parsing the output of a command, I leveraged the exposed XML API on the PA platform, which permits the user to access the system with a persistent token string known as an API key.
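The key is returned inside a small XML document, so obtaining it mostly comes down to pulling the text out of the key element. A minimal sketch of that extraction follows. The function name and the sample response are assumptions for illustration, and the key value is a made-up placeholder; no request is sent.

```shell
#!/bin/bash
# extract_api_key XML
# Prints the text between <key> and </key>, or nothing if it is absent.
extract_api_key() {
    echo "$1" | sed -n 's:.*<key>\(.*\)</key>.*:\1:p'
}

# A real response would come from a keygen request along the lines of
#   curl -ks "https://$host/api/?type=keygen&user=$user&password=$pass"
# The sample below is illustrative and the key is a placeholder.
sample="<response status = 'success'><result><key>EXAMPLEKEY123=</key></result></response>"
extract_api_key "$sample"
```

An empty result is a convenient failure signal, since a rejected login returns XML without a key element.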

Fortunately, Palo Alto firewalls expose all of their system environmental metrics through a single URI (/api/?type=op&cmd=<show><system><environmentals></environmentals></system></show>). This meant that my new, more robust script could monitor much more than just power supply alarms: it reports any anomaly returned in the XML output, such as ambient area temperatures, fan tray RPM and power rail voltage fluctuations. The following script was produced in a single morning and is currently being used to monitor system environment data of over 200 corporate security devices:

#!/bin/bash

# Imports

source ../api/bash/api.sh

source_all

# Check number of arguments

if [[ $# -ge 2 ]]; then

echo "$(red "Unknown argument(s) were supplied")"

exit

elif [[ $# -eq 1 ]]; then

# If argument is a file which exists in same directory

if ! [[ -f "$1" ]]; then

echo "$(red "File $1 does not exist in $(pwd)")"

exit

fi

fi

# Get list of host(s)

case $# in

# No file supplied as input, ask for one host

0 ) echo -e "Device (host/IP): \c"; read -r host

declare -a hosts=("$host")

;;

# File given, read into array

1 ) dos2unix "$1"

readarray -t hosts < "$1"

;;

esac

# Ask user for username

echo -e "Username: \c"; read -r user

# Ask user for password

echo -n "XML API password: "; read -rs pass && echo

pass="${pass/$'}'/$'\}'}"

# Ask user for Panorama hostname

echo -e "Panorama (host/IP): \c"; read -r panorama

# Ask user who we want to alert

echo -e "SMTP alert destination: \\c"; read -r alert

# Ask user for email subject

echo -e "SMTP subject: \\c"; read -r sbj

# Ask user for polling interval in seconds

echo -e "Polling interval (s): \\c"; read -r sleeptime

if [[ $(is_int "$sleeptime") ]]; then

sleeptime=$(($(cut_leading_zero "$sleeptime")))

else

sleeptime=3600

fi

echo

# Request API key

echo "$(yellow "Requesting API key...")"

key="$(get_pa_api_key "$user" "$pass" "$panorama")"

# Check key

if [[ "$key" != "" ]]; then

echo "$(green "Success: $key")"

else

echo "$(red "Failed to parse API key from XML")"

exit

fi

echo

# Retrieve info

while true; do

results=""

# Iterate through all hosts

for host in "${hosts[@]}"; do

[[ -z "$host" ]] && break

echo "$host:"

# Get system environmentals

xml=$(curl -ks --connect-timeout 20 "https://$host/api/?type=op&cmd=<show><system><environmentals></environmentals></system></show>&key=$key")

start=false

alarm=""

description=""

while read_xml; do

[[ "$TAG" == "result" ]] && start=true

[[ "$start" != true ]] && continue

[[ "$TAG" == "/result" ]] && break

[[ "$TAG" == "alarm" ]] && alarm="$CONTENT"

[[ "$TAG" == "description" ]] && description="$CONTENT"

if [[ "$alarm" != "" && "$description" != "" ]]; then

result="$description: $alarm"

if [[ "$alarm" != "False" ]]; then

results="$results\n$host:\n$result"

echo "$(red "$result")"

else

echo "$(green "$result")"

fi

alarm=""

description=""

fi

done < <(echo "$xml")

echo

done

# Send email if needed

if [[ -n "$results" ]]; then

echo "$(yellow "Sending email...")"

echo -e "Possible firewall system environmental alarms detected.\n$results" | mail -s "$sbj" "$alert" -- -f email@address.com

echo

fi

results=""

echo -e "$(yellow "Sleeping...")" && sleep $((sleeptime))

done

An example of expected output, indicating no alarms:

Fan Tray: False

Power Supply #1 (left): False

Power Supply #2 (right): False

Temperature @ Rear Left[U54]: False

Temperature @ Front Left[U62]: False

Temperature @ Rear Right[U121]: False

Temperature @ Front Right[U101]: False

Temperature: 73XX DP Core: False

Temperature: Qumran Switch Core: False

Temperature: FE100 Core: False

Temperature: Broadwell MP Core: False

Fan #1 RPM: False

Fan #2 RPM: False

Fan #3 RPM: False

Power: DP - Switch 1.0V Core: False

Power: DP - Switch 1.0V Serdes: False

Power: DP - 2.5V Power Rail: False

Power: DP - 3.3V Power Rail: False

Power: CE - CE10 1.2V Power Rail: False

Power: CE - CE10 1.8V Power Rail: False

Power: CE - CE10 0.95V Power Rail: False

Power: CE - PCIe SWT 1.0V Power Rail: False

Power: CE - CE10 MGTAVCC 1.0V: False

Power: CE - CE10 MGTAVCC 1.2V: False

Power: CE - CE10 1.35V Power Rail: False

Power: CE - CE10 MGTAVCC 1.8V: False

Power: DP - 0.9V Power Rail: False

Power: MP - 1.8V VCCIN: False

Power: MP - 1V05: False

Power: MP - 1V2_VDDQ: False

Power: MP - 0V6_VTT: False

Power: MP - 1V3_VCCKRHV: False

Power: MP - 1V5_PCH: False

Power: MP - 1V7_SUS: False

Power: MP - 2V5_DDR4: False

Power: MP - 3V3_PCH: False

Power: MP - 3V3: False

Power: DP - 0.95V TCAM: False

Power: MP - 5V0: False

Power: DP - 0.95V NP: False

Power: DP - 1.028V VCS: False

Power: DP - 1.2V Power Rail: False

Power: DP - 1.2V NP: False

Power: DP - 1.5V Power Rail: False

Power: DP - 1.8V Power Rail: False

An example of alarm output, generating an email (1.1V Power Rail: True):

Temperature near CPLD (inlet): False

Temperature near Cavium (outlet): False

Temperature near Management Port (inlet): False

Temperature near Switch (midboard): False

Temperature @ Cavium Core: False

Fan #1 RPM: False

Fan #2 RPM: False

Fan #3 RPM: False

0.85V Power Rail: False

0.9V Power Rail: False

1.0V Power Rail: False

1.1V Power Rail: True

1.2V Power Rail: False

1.5V Power Rail: False

1.8V Power Rail: False

2.5V Power Rail: False

3.3V Power Rail: False

3.3V SD Power Rail: False
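The script relies on a read_xml helper from my API that is not shown on this page. A common Bash idiom for this kind of tag-by-tag reading, which may differ from the actual helper, is sketched below against a hand-written sample document. The sample XML is illustrative and assumes each description appears before its alarm value.

```shell
#!/bin/bash
# read_xml
# Reads up to the next '<', then splits what was read at the first '>'
# into TAG (tag name and attributes) and CONTENT (text after the tag).
read_xml() {
    local IFS='>'
    read -r -d '<' TAG CONTENT
}

# Hand-written sample, not real device output.
xml='<response status="success"><result><entry><description>Fan #1 RPM</description><alarm>False</alarm></entry><entry><description>1.1V Power Rail</description><alarm>True</alarm></entry></result></response>'

alarms=""
description=""
while read_xml; do
    # Remember the most recent description so it can label the alarm.
    [[ $TAG == "description" ]] && description=$CONTENT
    if [[ $TAG == "alarm" && $CONTENT != "False" ]]; then
        alarms+="$description: $CONTENT"$'\n'
    fi
done < <(printf '%s' "$xml")

printf '%s' "$alarms"
```

For the sample document this prints a single line, 1.1V Power Rail: True, matching the alarm case shown above.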

Results

While I could probably close this project, I am keeping it open so I can append more examples of basic network automation in the future. There will always be another script to write, and there will always be something to automate or improve upon.

I have written countless other scripts to support this ongoing endeavor, but felt that the examples I have included on this page do well to document some of the more interesting ideas I have implemented. Until I decide to document more examples, I hope that the information above will be insightful to those readers interested in automated network support.